Can Bias Be Eliminated from Algorithms?

The predictive software used to automate decision-making often discriminates against disadvantaged groups. A new approach devised by Soheil Ghili at Yale SOM and his colleagues could significantly reduce bias while still giving accurate results.

Organizations increasingly rely on algorithms to inform decisions, whether they’re considering loan applicants or assessing a criminal’s likelihood of reoffending. But biases lurk inside these seemingly “objective” software programs. A recent investigation by The Markup found that lenders are 80% more likely to reject Black applicants than similar White applicants, a disparity attributed in large part to commonly used mortgage-approval algorithms.

It might seem like the obvious solution is to instruct the algorithm to ignore race—to literally remove it from the equation. But this strategy can introduce a subtler bias called “latent discrimination.” For instance, Black people might be more concentrated in certain geographic areas. So the software will link the likelihood of loan repayment with location, essentially using that factor as a proxy for race.
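A small numerical sketch can make the proxy effect concrete. The counts below are invented for illustration (they do not come from The Markup or from Ghili's study), and the "model" is simply the average repayment rate per neighborhood, which never sees group membership.

```python
from collections import defaultdict

# Hypothetical past loans: (group, neighborhood) -> (applicants, loans repaid).
# Recorded repayment is 90% for group A and 60% for group B in BOTH
# neighborhoods, so neighborhood itself carries no extra information.
PAST_LOANS = {
    ("A", "north"): (80, 72),
    ("A", "south"): (20, 18),
    ("B", "north"): (20, 12),
    ("B", "south"): (80, 48),
}


def race_blind_scores(loans):
    """Score each neighborhood by its overall repayment rate, ignoring group."""
    applicants = defaultdict(int)
    repaid = defaultdict(int)
    for (_group, hood), (n, r) in loans.items():
        applicants[hood] += n
        repaid[hood] += r
    return {hood: repaid[hood] / applicants[hood] for hood in applicants}


def average_score_by_group(loans, scores):
    """Average the neighborhood scores over the members of each group."""
    weighted = defaultdict(float)
    size = defaultdict(int)
    for (group, hood), (n, _r) in loans.items():
        weighted[group] += n * scores[hood]
        size[group] += n
    return {group: weighted[group] / size[group] for group in size}


scores = race_blind_scores(PAST_LOANS)
by_group = average_score_by_group(PAST_LOANS, scores)
print(scores)
print(by_group)
```

Under these made-up numbers, the north scores 0.84 and the south 0.66, so group B still averages a lower score than group A (roughly 0.70 versus 0.80) even though the model was never told anyone's group.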

Algorithms can absorb the bias in the larger society and then reinforce it, Ghili says.

In a new study, Soheil Ghili, an assistant professor of marketing at Yale SOM, and his colleagues proposed another solution. In their system, sensitive features such as gender or race are included when the software is being “trained” to recognize patterns in older data. But when the software evaluates new cases, those attributes are masked. This approach “will reduce discrimination by a substantial amount without reducing accuracy too much,” Ghili says.

As algorithms are used for a growing number of important decisions, the bias in them must be addressed, Ghili says. If a recidivism algorithm predicts that people of a certain race are more likely to reoffend, but the real reason is that those people are arrested more often even when they’re innocent, then “you have a model that implicitly says, ‘Those who treated this group with discrimination were right,’” Ghili says.

And if an algorithm’s output causes resources to be withheld from disadvantaged groups, this unfair process contributes to a “vicious loop,” he says. Those people “are going to become even more marginalized.”

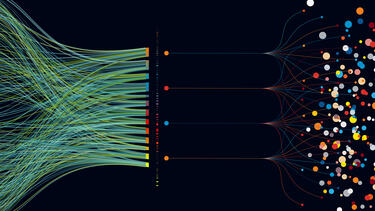

The prediction process carried out by machine-learning algorithms takes place in two stages. First, the algorithm is given training data so it can “learn” how attributes are linked to certain outcomes. For example, software that predicts recidivism might be trained on data containing criminals’ demographic details and history. Second, the algorithm is given information about new cases and predicts, based on similarities to previous cases, what will happen.
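The two stages can be sketched with a deliberately simple learner. The article does not say which kind of model any particular tool uses; the nearest-neighbor rule below is only a stand-in chosen because it predicts from "similarities to previous cases," and the data and feature names are hypothetical.

```python
import math

# Hypothetical past cases: ((prior_convictions, age), reoffended?)
PAST_CASES = [
    ((0, 45), False),
    ((1, 38), False),
    ((4, 22), True),
    ((6, 19), True),
]


def train(cases):
    """Stage 1: 'learn' from past cases. This toy learner just stores them."""
    return list(cases)


def predict(model, new_case):
    """Stage 2: return the outcome of the most similar stored case."""
    _features, outcome = min(model, key=lambda c: math.dist(c[0], new_case))
    return outcome


model = train(PAST_CASES)
print(predict(model, (5, 21)))  # closest stored case is (4, 22)
print(predict(model, (0, 40)))  # closest stored case is (1, 38)
```

Whatever patterns sit in the stored cases, including biased ones, carry straight through to the predictions, which is why the choice of training data matters so much.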

Since removing sensitive details from training data can lead to latent discrimination, researchers need to find alternative approaches to reduce bias. One possibility is to boost the scores of people from disadvantaged groups, while also attempting to maximize the accuracy of predictions.

In this scenario, however, two applicants who are identical other than their race or gender could receive different scores. And this type of outcome “usually has a potential to lead to backlash,” Ghili says.
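The property at stake here can be written as a simple check: do two applicants who match on every non-sensitive field get the same score? The scorer, field names, and boost size below are hypothetical, chosen only to illustrate the point.

```python
from itertools import combinations

SENSITIVE = ("race", "gender")  # assumed field names


def boosted_score(applicant, boost=0.05):
    """Hypothetical scorer: a base score plus a bonus for one group."""
    bonus = boost if applicant["race"] == "B" else 0.0
    return applicant["base_score"] + bonus


def treats_identical_alike(score_fn, applicants):
    """True if applicants who match on all non-sensitive fields score equally."""
    def non_sensitive(a):
        return {k: v for k, v in a.items() if k not in SENSITIVE}

    return all(
        score_fn(a) == score_fn(b)
        for a, b in combinations(applicants, 2)
        if non_sensitive(a) == non_sensitive(b)
    )


applicants = [
    {"race": "A", "gender": "F", "base_score": 0.70},
    {"race": "B", "gender": "F", "base_score": 0.70},
]

print(treats_identical_alike(boosted_score, applicants))
print(treats_identical_alike(lambda a: a["base_score"], applicants))
```

The boosted scorer fails the check because the two otherwise identical applicants receive 0.70 and 0.75, while a scorer that ignores the sensitive fields passes it.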

Ghili collaborated with Amin Karbasi, an associate professor of electrical engineering and computer science at Yale, and Ehsan Kazemi, now at Google, to find another solution. The researchers came up with an algorithm that they called “train then mask.”

During training, the software is given all information about past cases, including sensitive features. This step ensures that the algorithm doesn’t assign undue importance to unrelated factors, such as location, that could serve as proxies for sensitive features. In the loan example, the software would identify race as a significant factor influencing loan repayment.

But in the second stage, sensitive features would be hidden. All new cases would be assigned the same value for those features. For example, every loan applicant could be considered, for the purposes of prediction, a White man. That would force the algorithm to look beyond race (and proxies for race) when comparing individuals.
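The two steps described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the researchers' implementation: the loan counts are invented, the model is a plain linear fit trained by gradient descent, and names such as `fit_linear` and `train_then_mask_score` are hypothetical.

```python
# Hypothetical past loans: (is_group_b, is_south) -> (applicants, repaid).
# Recorded repayment differs by group (90% vs. 60%) but not by neighborhood.
CELLS = {(0, 0): (80, 72), (0, 1): (20, 18), (1, 0): (20, 12), (1, 1): (80, 48)}


def expand(cells):
    """Turn the counts into one (features, outcome) row per past applicant."""
    xs, ys = [], []
    for features, (n, repaid) in cells.items():
        xs += [features] * n
        ys += [1.0] * repaid + [0.0] * (n - repaid)
    return xs, ys


def fit_linear(xs, ys, lr=0.5, steps=500):
    """Least-squares linear fit by gradient descent; returns [bias, w1, ...]."""
    w = [0.0] * (len(xs[0]) + 1)
    for _ in range(steps):
        grad = [0.0] * len(w)
        for x, y in zip(xs, ys):
            feats = (1.0,) + tuple(x)
            err = sum(wi * fi for wi, fi in zip(w, feats)) - y
            for j, fj in enumerate(feats):
                grad[j] += err * fj / len(xs)
        w = [wi - lr * gj for wi, gj in zip(w, grad)]
    return w


def predict(w, x):
    return w[0] + sum(wi * xi for wi, xi in zip(w[1:], x))


xs, ys = expand(CELLS)

# Stage 1: train WITH the sensitive feature, so neighborhood is not
# credited with an effect that belongs to group membership.
aware = fit_linear(xs, ys)

# Stage 2: mask. Every new applicant gets the same sensitive value.
MASK_VALUE = 0


def train_then_mask_score(is_group_b, is_south):
    # is_group_b is accepted but deliberately ignored at prediction time.
    return predict(aware, (MASK_VALUE, is_south))


# For contrast: a model trained WITHOUT the sensitive feature.
blind = fit_linear([(south,) for _b, south in xs], ys)

print("neighborhood weight, trained with group:", round(aware[2], 3))
print("neighborhood weight, trained without group:", round(blind[1], 3))
```

With these invented numbers, the model trained with the group feature gives neighborhood a weight close to zero, while the model trained without it gives the south a weight of about -0.18. After masking, two applicants from the same neighborhood receive the same score whatever their group.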

“To be clear, train then mask is by no means the only method out there that proposes to deal with the issue of algorithmic bias using ‘awareness’ rather than ‘unawareness’ of sensitive features such as gender or race,” Ghili says. “But unlike most proposed methods, train then mask emphasizes helping disadvantaged groups while enforcing that those who are identical with respect to all other—non-sensitive—features be treated the same.”

The researchers tested their algorithm with three tasks: predicting a person’s income status, whether a credit applicant would pay bills on time, and whether a criminal would reoffend. They trained the software on real-world data and compared the output to the results of other algorithms.

The “train then mask” approach was nearly as accurate as an “unconstrained” algorithm, that is, one that had not been modified to reduce unfairness. For instance, the unconstrained software correctly predicted income status 82.5% of the time, and the “train then mask” algorithm’s accuracy on that task was 82.3%, a gap of 0.2 percentage points.

Another advantage of this approach is that it avoids what Ghili calls “double unfairness.” Consider a situation with a majority and a minority group. Let’s say that, if everything were perfectly fair, the minority group would perform better than the majority group on certain metrics—for instance, because they tend to be more highly educated. However, because of discrimination, the minority group performs the same as the majority.

Now imagine that an algorithm is predicting people’s performance based on demographic traits. If the software designers attempt to eliminate bias by simply minimizing the average difference in output between groups, this approach will penalize the minority group. The correct output would give the minority group a higher average score than the majority, not the same score.
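A toy calculation shows how this penalty arises. The scores are invented, and shifting each group to the overall mean is only one simple stand-in for "minimizing the average difference in output"; real fairness constraints are usually built into training rather than applied afterward.

```python
from statistics import mean

# Hypothetical scores from some model. The minority group scores higher on
# average because, by assumption, its members are more highly educated.
SCORES = {
    "majority": [0.6, 0.7, 0.8],
    "minority": [0.7, 0.8, 0.9],
}


def enforce_equal_means(scores):
    """Shift each group's scores so every group mean equals the overall mean."""
    overall = mean(s for group in scores.values() for s in group)
    return {
        name: [s + (overall - mean(group)) for s in group]
        for name, group in scores.items()
    }


adjusted = enforce_equal_means(SCORES)
for name in SCORES:
    print(name, round(mean(SCORES[name]), 2), "->", round(mean(adjusted[name]), 2))
```

Equalizing the averages lowers every minority score by about 0.05 and raises every majority score by the same amount, which erases a difference that, in this scenario, was justified.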

The “train then mask” algorithm does not explicitly minimize the difference in output between groups, Ghili says. So this “double unfairness” problem wouldn’t arise.

Some organizations might prioritize keeping groups’ average scores as close to each other as possible. In that case, the “train then mask” algorithm might not be the right choice.

Ghili says his team’s strategy may not be right for every task, depending on which types of bias the organization considers the most important to counteract. But unlike some other approaches, the new algorithm avoids latent discrimination while also guaranteeing that two applicants who differ in their gender or race but are otherwise identical will be treated the same.

“If you want to have these two things at the same time, then I think this is for you,” Ghili says.